Ripple

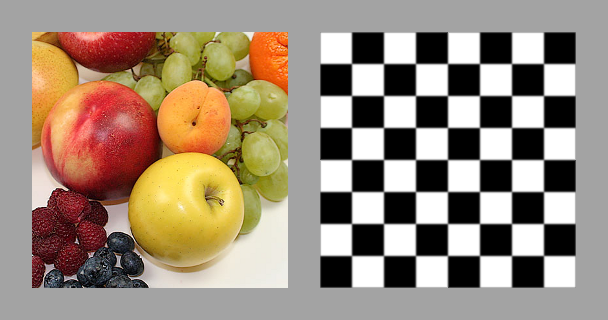

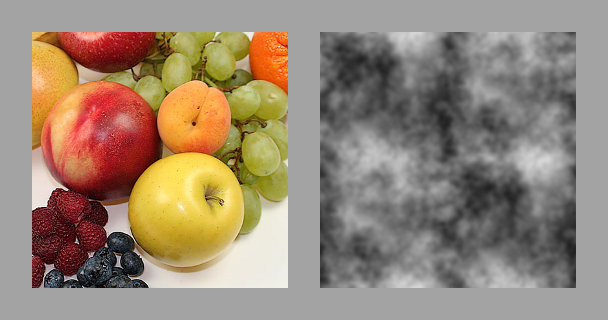

Examples

The Ripple program takes two images as input, an image to be displaced and an image to be used as a displacement map, and displays a textured polygon with an animated "rippling" texture. The texture is animated by modifying the texture coordinates for the displacement map over time. A wide range of effects can be obtained simply by using different displacement maps.
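Because the effect depends entirely on the displacement map, it can be useful to generate maps procedurally while experimenting. The following is a minimal sketch in plain Java and is not part of the Ripple program: the class name DisplacementMapSketch, its methods, and the choice of a sine pattern are all assumptions made for illustration. The pattern uses a whole number of waves so that the left and right (and top and bottom) edges match, which only matters if the map is sampled with a repeating wrap mode; the wrap mode used by the program is not stated here.

```java
import java.awt.image.BufferedImage;

/** Hypothetical generator for greyscale displacement maps; not part of Ripple. */
final class DisplacementMapSketch
{
  /**
   * Grey level in [0, 1] at texture coordinates (u, v).
   * With a whole number of waves, the value at u = 0 equals the value at u = 1.
   */
  static double level(final double u, final double v, final int waves)
  {
    final double a = 2.0 * Math.PI * waves;
    return 0.5 + (0.25 * (Math.sin(a * u) + Math.sin(a * v)));
  }

  /** Builds a size x size image whose red, green and blue channels are equal. */
  static BufferedImage tileableMap(final int size, final int waves)
  {
    final BufferedImage image =
      new BufferedImage(size, size, BufferedImage.TYPE_INT_RGB);

    for (int y = 0; y < size; ++y) {
      for (int x = 0; x < size; ++x) {
        // Sample at the centre of each pixel.
        final double u = (x + 0.5) / size;
        final double v = (y + 0.5) / size;
        final int grey = (int) Math.round(level(u, v, waves) * 255.0);
        image.setRGB(x, y, (grey << 16) | (grey << 8) | grey);
      }
    }
    return image;
  }

  public static void main(final String[] args)
  {
    final BufferedImage map = tileableMap(256, 4);
    System.out.println(map.getWidth() + "x" + map.getHeight());
  }
}
```

The resulting image could be written to disk with javax.imageio.ImageIO and passed to the program as the displacement map argument.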

Program

The program depends on a set of very simple shaders. The shader_uv.v and shader_uv.f shaders do trivial vertex transformation and texturing. These programs are completely standard fare and only implement the bare minimum necessary to draw textured polygons.

The main displacement work takes place in shader_displace.f:

    #version 110

    varying vec2 vertex_uv;

    uniform sampler2D texture;
    uniform sampler2D displace_map;
    uniform float maximum;
    uniform float time;

    void
    main (void)
    {
      // Scale the time value and use it to translate the lookup
      // into the displacement map.
      float time_e = time * 0.001;
      vec2 uv_t = vec2(vertex_uv.s + time_e, vertex_uv.t + time_e);

      // Read the (greyscale) displacement value and scale it.
      vec4 displace = texture2D(displace_map, uv_t);
      float displace_k = displace.g * maximum;

      // Offset the original coordinates and sample the image.
      vec2 uv_displaced = vec2(vertex_uv.x + displace_k,
                               vertex_uv.y + displace_k);

      gl_FragColor = texture2D(texture, uv_displaced);
    }

The program takes the current texture coordinates (emitted by the vertex shader and interpolated across the polygon), a texture, a displacement map, a scaling value (maximum), and the current time (in frames, although the time unit is not important). First, the current texture coordinates vertex_uv are translated by the scaled time value time_e. Then, a pixel is read from the displacement map at the resulting texture coordinates.

The displacement map is assumed to be greyscale. Pixels are represented as four-element RGBA vectors with floating point components. The shader reads the green channel of the pixel (with a greyscale image, reading the red or blue channel would give identical results) and scales this value by maximum to obtain a final offset value displace_k. Note that this value is in texture-space units, not pixels: in a 256x256 pixel image, a value of 0.25 represents 64 pixels. The program then adds displace_k to both components of the original interpolated texture coordinates and retrieves a pixel from the current texture using the displaced coordinates.
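The arithmetic performed by the fragment shader can be mirrored on the CPU to check the numbers. The following is a minimal sketch in plain Java, not part of the Ripple program: the class name DisplaceSketch, its methods, and the use of a function argument in place of a real displacement map lookup are all assumptions made for illustration.

```java
import java.util.function.DoubleBinaryOperator;

/** Hypothetical CPU-side mirror of shader_displace.f; not part of Ripple. */
final class DisplaceSketch
{
  /** Mirrors "time * 0.001" in the shader. */
  static double scaledTime(final double time)
  {
    return time * 0.001;
  }

  /**
   * Computes the displaced texture coordinates for one fragment.
   * The displaceMap function stands in for the green channel of
   * texture2D(displace_map, ...) and is expected to return a value in [0, 1].
   */
  static double[] displace(
    final double u,
    final double v,
    final double time,
    final double maximum,
    final DoubleBinaryOperator displaceMap)
  {
    final double timeE = scaledTime(time);
    final double green = displaceMap.applyAsDouble(u + timeE, v + timeE);
    final double displaceK = green * maximum;
    // The same offset is added to both components.
    return new double[] { u + displaceK, v + displaceK };
  }

  /** Converts a texture-space offset to pixels for an image of the given size. */
  static double toPixels(final double offset, final int size)
  {
    return offset * size;
  }

  public static void main(final String[] args)
  {
    // Assumption: a uniformly mid-grey displacement map.
    final double[] uv = displace(0.5, 0.5, 100.0, 0.2, (s, t) -> 0.5);
    System.out.println(uv[0] + ", " + uv[1]);
    System.out.println(toPixels(0.25, 256) + " pixels");
  }
}
```

With a mid-grey map and a maximum of 0.2, every fragment is offset by roughly 0.1 in both directions. Since the scaled time advances by 0.001 per unit of time, the displacement map lookup shifts by one full texture width every 1000 time units.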

The OpenGL program that drives the shaders is similarly simple. On startup, it loads the requested image and displacement map image, allocates a framebuffer with a blank texture attached as color buffer storage, and compiles and loads the relevant shading programs. These uninteresting but essential functions are implemented in the Utilities class.

    Ripple(
      final String image,
      final String displace)
      throws IOException
    {
      this.texture_image = Utilities.loadTexture(image);
      this.texture_displacement_map = Utilities.loadTexture(displace);

      this.framebuffer_texture =
        Utilities.createEmptyTexture(
          Ripple.TEXTURE_WIDTH,
          Ripple.TEXTURE_HEIGHT);
      this.framebuffer = Utilities.createFramebuffer(this.framebuffer_texture);

      this.shader_uv = Utilities.createShader("dist/shader_uv.v", "dist/shader_uv.f");
      this.shader_displace =
        Utilities.createShader("dist/shader_uv.v", "dist/shader_displace.f");
    }

Rendering involves two steps. First, the program needs to generate a texture based on the loaded image and displacement map. It does this by binding the allocated framebuffer and then rendering a fullscreen textured quad using the previously mentioned displacement shader.

    private void renderToTexture()
    {
      GL11.glMatrixMode(GL11.GL_PROJECTION);
      GL11.glLoadIdentity();
      GL11.glOrtho(0, 1, 0, 1, 1, 100);
      GL11.glMatrixMode(GL11.GL_MODELVIEW);
      GL11.glLoadIdentity();
      GL11.glTranslated(0, 0, -1);

      GL11.glViewport(0, 0, Ripple.TEXTURE_WIDTH, Ripple.TEXTURE_HEIGHT);
      GL11.glClearColor(0.25f, 0.25f, 0.25f, 1.0f);
      GL11.glClear(GL11.GL_COLOR_BUFFER_BIT);

      GL30.glBindFramebuffer(GL30.GL_FRAMEBUFFER, this.framebuffer);
      {
        // Texture unit 0 holds the image, unit 1 the displacement map.
        GL13.glActiveTexture(GL13.GL_TEXTURE0);
        GL11.glBindTexture(GL11.GL_TEXTURE_2D, this.texture_image);
        GL13.glActiveTexture(GL13.GL_TEXTURE1);
        GL11.glBindTexture(GL11.GL_TEXTURE_2D, this.texture_displacement_map);

        GL20.glUseProgram(this.shader_displace);
        {
          final int ut = GL20.glGetUniformLocation(this.shader_displace, "texture");
          final int udm = GL20.glGetUniformLocation(this.shader_displace, "displace_map");
          final int umax = GL20.glGetUniformLocation(this.shader_displace, "maximum");
          final int utime = GL20.glGetUniformLocation(this.shader_displace, "time");

          GL20.glUniform1i(ut, 0);
          GL20.glUniform1i(udm, 1);
          GL20.glUniform1f(umax, 0.2f);
          GL20.glUniform1f(utime, this.time);
          Utilities.checkGL();

          // Draw a quad covering the whole framebuffer.
          GL11.glBegin(GL11.GL_QUADS);
          {
            GL11.glTexCoord2f(0, 1);
            GL11.glVertex3d(0, 1, 0);
            GL11.glTexCoord2f(0, 0);
            GL11.glVertex3d(0, 0, 0);
            GL11.glTexCoord2f(1, 0);
            GL11.glVertex3d(1, 0, 0);
            GL11.glTexCoord2f(1, 1);
            GL11.glVertex3d(1, 1, 0);
          }
          GL11.glEnd();
        }
        GL20.glUseProgram(0);

        // Unbind the textures from both units.
        GL13.glActiveTexture(GL13.GL_TEXTURE0);
        GL11.glBindTexture(GL11.GL_TEXTURE_2D, 0);
        GL13.glActiveTexture(GL13.GL_TEXTURE1);
        GL11.glBindTexture(GL11.GL_TEXTURE_2D, 0);
      }
      GL30.glBindFramebuffer(GL30.GL_FRAMEBUFFER, 0);
      Utilities.checkGL();
    }
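The reason the quad with corners at (0, 0) and (1, 1) fills the whole framebuffer follows from the orthographic projection set up at the start of renderToTexture. The following is a small sketch in plain Java of the standard glOrtho mapping, written only to check the numbers: the class name OrthoSketch and its methods are assumptions made for illustration and do not appear in the program.

```java
/** Hypothetical helper reproducing the glOrtho coordinate mapping. */
final class OrthoSketch
{
  /**
   * Maps an x or y coordinate in [min, max] to normalized device
   * coordinates in [-1, 1], as glOrtho does for left/right and bottom/top.
   */
  static double orthoXY(final double c, final double min, final double max)
  {
    return ((2.0 * (c - min)) / (max - min)) - 1.0;
  }

  /**
   * Maps an eye-space z coordinate to normalized device coordinates for
   * glOrtho with the given near and far distances.
   */
  static double orthoZ(final double zEye, final double near, final double far)
  {
    return ((-2.0 * zEye) - (far + near)) / (far - near);
  }

  public static void main(final String[] args)
  {
    // glOrtho(0, 1, 0, 1, 1, 100) with the quad translated to z = -1.
    System.out.println(orthoXY(0.0, 0.0, 1.0)); // left/bottom edge
    System.out.println(orthoXY(1.0, 0.0, 1.0)); // right/top edge
    System.out.println(orthoZ(-1.0, 1.0, 100.0)); // depth of the quad
  }
}
```

The quad's edges map to -1 and 1 on both axes, so it covers the entire viewport, and the translation by -1 places it on the near boundary of the view volume.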

After the above function has executed, framebuffer_texture contains the "displaced" texture. The program then draws a textured quad to the screen:

    private void renderScene()
    {
      GL11.glMatrixMode(GL11.GL_PROJECTION);
      GL11.glLoadIdentity();
      GL11.glFrustum(-1, 1, -1, 1, 1, 100);
      GL11.glMatrixMode(GL11.GL_MODELVIEW);
      GL11.glLoadIdentity();
      GL11.glTranslated(0, 0, -1.25);
      GL11.glRotated(30, 0, 0, 1);

      GL11.glViewport(0, 0, Ripple.SCREEN_WIDTH, Ripple.SCREEN_HEIGHT);
      GL11.glClearColor(0.25f, 0.25f, 0.25f, 1.0f);
      GL11.glClear(GL11.GL_COLOR_BUFFER_BIT);

      GL20.glUseProgram(this.shader_uv);
      {
        // Use the texture produced by renderToTexture().
        GL13.glActiveTexture(GL13.GL_TEXTURE0);
        GL11.glBindTexture(GL11.GL_TEXTURE_2D, this.framebuffer_texture);
        Utilities.checkGL();

        final int ut = GL20.glGetUniformLocation(this.shader_uv, "texture");
        GL20.glUniform1i(ut, 0);
        Utilities.checkGL();

        GL11.glBegin(GL11.GL_QUADS);
        {
          GL11.glTexCoord2f(0, 0);
          GL11.glVertex3d(-0.75, 0.75, 0);
          GL11.glTexCoord2f(0, 1);
          GL11.glVertex3d(-0.75, -0.75, 0);
          GL11.glTexCoord2f(1, 1);
          GL11.glVertex3d(0.75, -0.75, 0);
          GL11.glTexCoord2f(1, 0);
          GL11.glVertex3d(0.75, 0.75, 0);
        }
        GL11.glEnd();
      }
      GL20.glUseProgram(0);
      Utilities.checkGL();
    }

It is, of course, possible to render directly to the screen using the displacement shader. The program described here avoids doing that in order to demonstrate that the resulting procedural texture is an ordinary texture that can be used in the same manner as any other.